强交互数据分析编程esProc SPL

数据分析编程?

看起来简单,其实很难

例简单查询

select id, name from T where id = 1

select area,sum(amount) from T group by area

例每支股票最长连续上涨天数

SELECT CODE, MAX(con_rise) AS longest_up_days

FROM (

SELECT CODE, COUNT(*) AS con_rise

FROM (

SELECT CODE, DT,

SUM(updown_flag) OVER (PARTITION BY CODE ORDER BY CODE, DT) AS no_up_days

FROM (

SELECT CODE, DT,

CASE WHEN CL > LAG(CL) OVER (PARTITION BY CODE ORDER BY CODE, DT) THEN 0

ELSE 1 END AS updown_flag

FROM stock

)

)

GROUP BY CODE, no_up_days

)

GROUP BY CODE

例1分钟内连续得分3次的球员

例每7天中连续三天活跃的用户数

例每天新用户的次日留存

例股价高于前后5天时当天的涨幅

例...

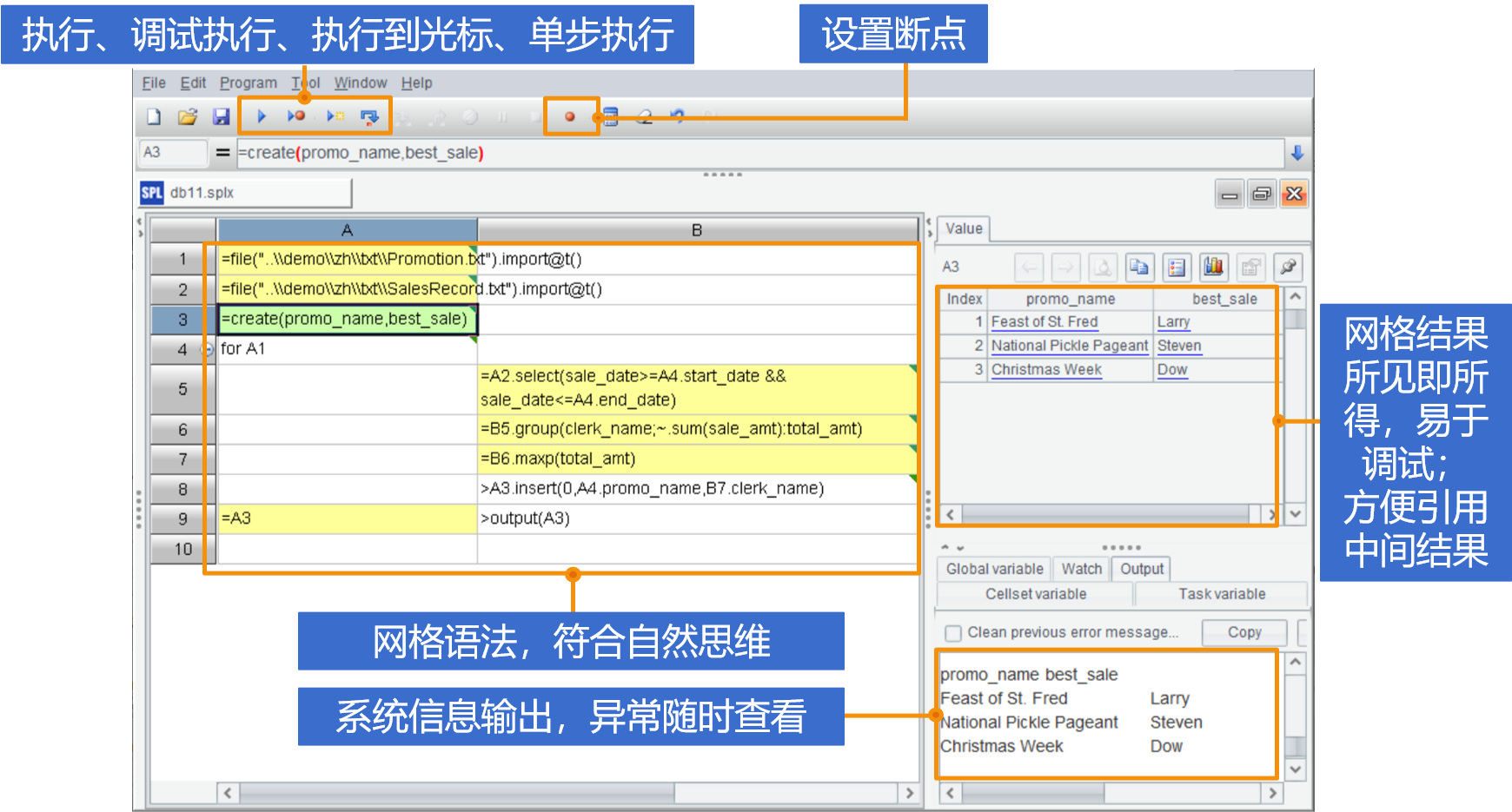

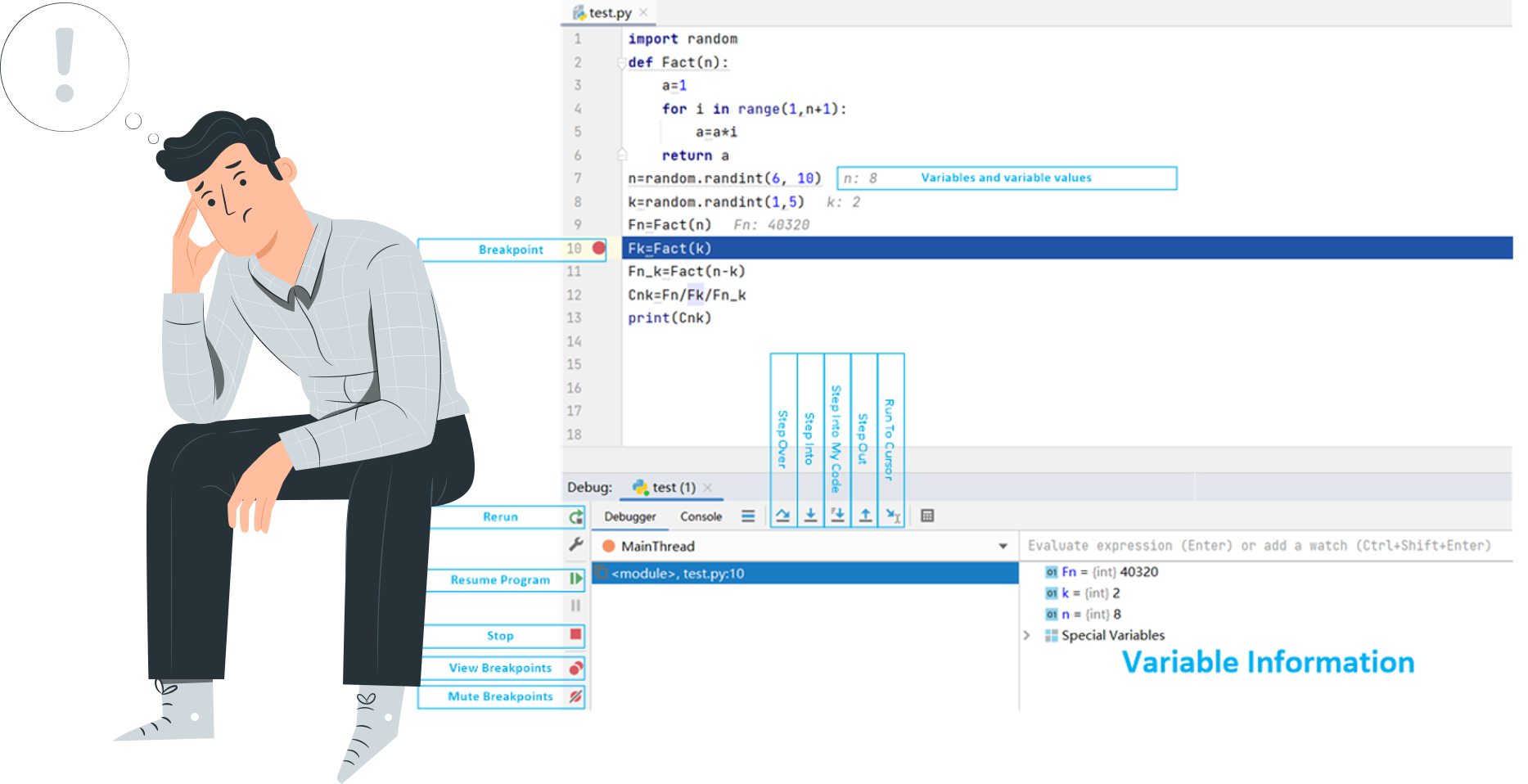

调试很麻烦

SQL没有设置断点、单步执行这些很常见的调试方法,嵌套多层时就要逐层拆分执行。

SELECT CODE, MAX(con_rise) AS longest_up_days

FROM (

SELECT CODE, COUNT(*) AS con_rise

FROM (

SELECT CODE, DT,

SUM(updown_flag) OVER (PARTITION BY CODE ORDER BY CODE, DT) AS no_up_days

FROM (

SELECT CODE, DT,

CASE WHEN CL > LAG(CL) OVER (PARTITION BY CODE ORDER BY CODE, DT) THEN 0

ELSE 1 END AS updown_flag

FROM stock

)

)

GROUP BY CODE, no_up_days

)

GROUP BY CODE

封闭沉重,环境复杂,外部数据要先入库,琐事多

6大优势助力数据分析师

1更简洁的代码

例每支股票最长连续上涨天数

SPL显得更简单,不再需要循环语句

SQL

SELECT CODE, MAX(con_rise) AS longest_up_days

FROM (

SELECT CODE, COUNT(*) AS con_rise

FROM (

SELECT CODE, DT,

SUM(updown_flag) OVER (PARTITION BY CODE ORDER BY CODE, DT) AS no_up_days

FROM (

SELECT CODE, DT,

CASE WHEN CL > LAG(CL) OVER (PARTITION BY CODE ORDER BY CODE, DT) THEN 0

ELSE 1 END AS updown_flag

FROM stock

)

)

GROUP BY CODE, no_up_days

)

GROUP BY CODE

SPL

| A | |

| 1 | =stock.sort(StockRecords.txt) |

| 2 | =T(A1).sort(DT) |

| 3 | =A2.group(CODE;~.group@i(CL< CL[-1]).max(~.len()):max_increase_days) |

Python

import pandas as pd

stock_file = "StockRecords.txt"

stock_info = pd.read_csv(stock_file,sep="\t")

stock_info.sort_values(by=['CODE','DT'],inplace=True)

stock_group = stock_info.groupby(by='CODE')

stock_info['label'] = stock_info.groupby('CODE')['CL'].diff().fillna(0).le(0).astype(int).cumsum()

max_increase_days = {}

for code, group in stock_info.groupby('CODE'):

max_increase_days[code] = group.groupby('label').size().max() – 1

max_rise_df = pd.DataFrame(list(max_increase_days.items()), columns=['CODE', 'max_increase_days'])

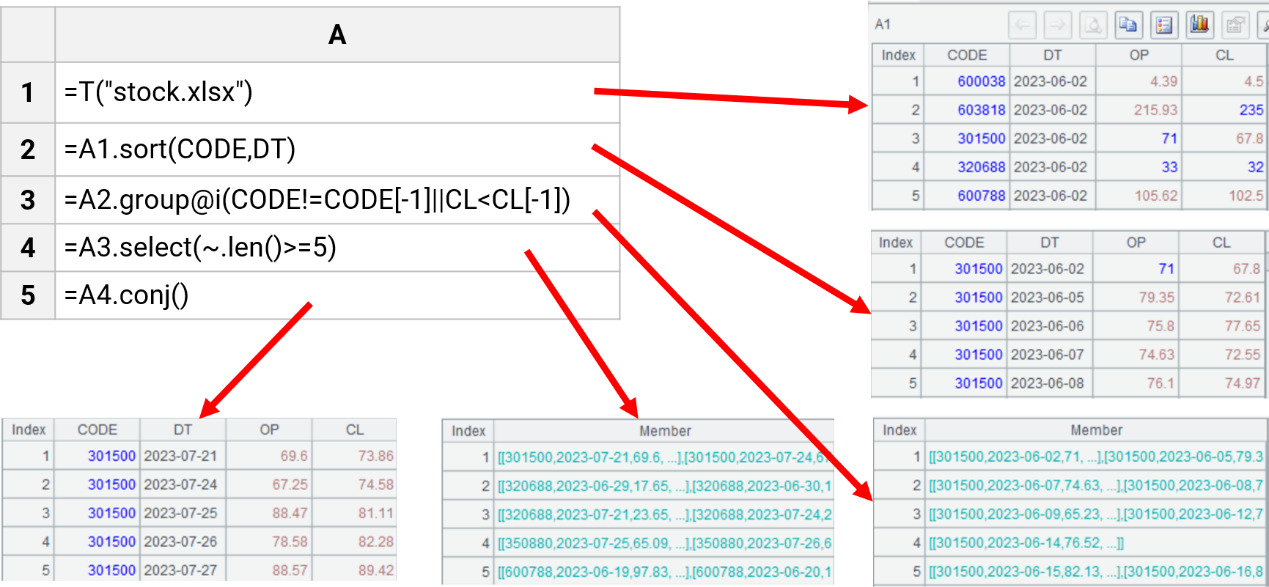

2强交互探索分析,便捷调试

例找出股票连涨超过5天的区间

3内置并行外存运算,跑出高性能

内存计算

| A | |

| 1 | smallData.txt |

| 2 | =file(A1).import@t() |

| 3 | =A2.groups(state;sum(amount):amount) |

外存计算

| A | |

| 1 | bigData.txt |

| 2 | =file(A1).cursor@t() |

| 3 | =A2.groups(state;sum(amount):amount) |

串行

| A | |

| 1 | bigData.txt |

| 2 | =file(A1).cursor@t() |

| 3 | =A2.groups(state;sum(amount):amount) |

并行

| A | |

| 1 | bigData.txt |

| 2 | =file(A1).cursor@tm() |

| 3 | =A2.groups(state;sum(amount):amount) |

4轻量便捷

普通笔记本即可流畅运行,无须服务器集群

高压缩率文件存储数据,易于携带

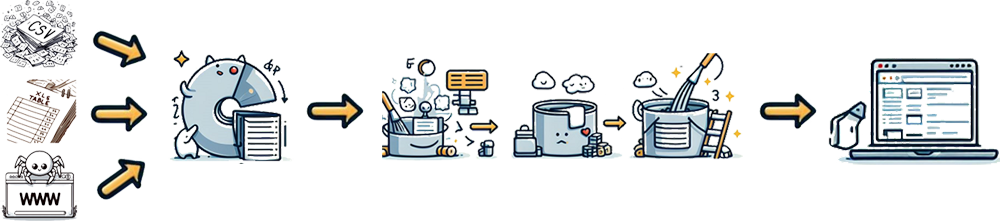

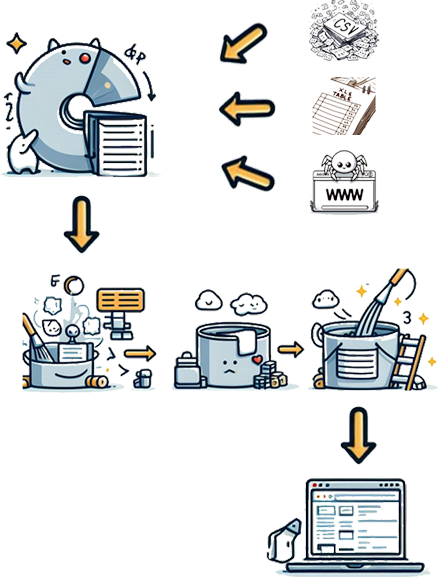

5开放体系,多样数据源直接算

6纯Java,探索结果直接进企业应用

比SQL好点,复杂情况还是麻烦

例每支股票最长连续上涨天数

import pandas as pd

stock_file = "StockRecords.txt"

stock_info = pd.read_csv(stock_file,sep="\t")

stock_info.sort_values(by=['CODE','DT'],inplace=True)

stock_group = stock_info.groupby(by='CODE')

stock_info['label'] = stock_info.groupby('CODE')['CL'].diff().fillna(0).le(0).astype(int).cumsum()

max_increase_days = {}

for code, group in stock_info.groupby('CODE'):

max_increase_days[code] = group.groupby('label').size().max() – 1

max_rise_df = pd.DataFrame(list(max_increase_days.items()), columns=['CODE', 'max_increase_days'])

调试还是print大法

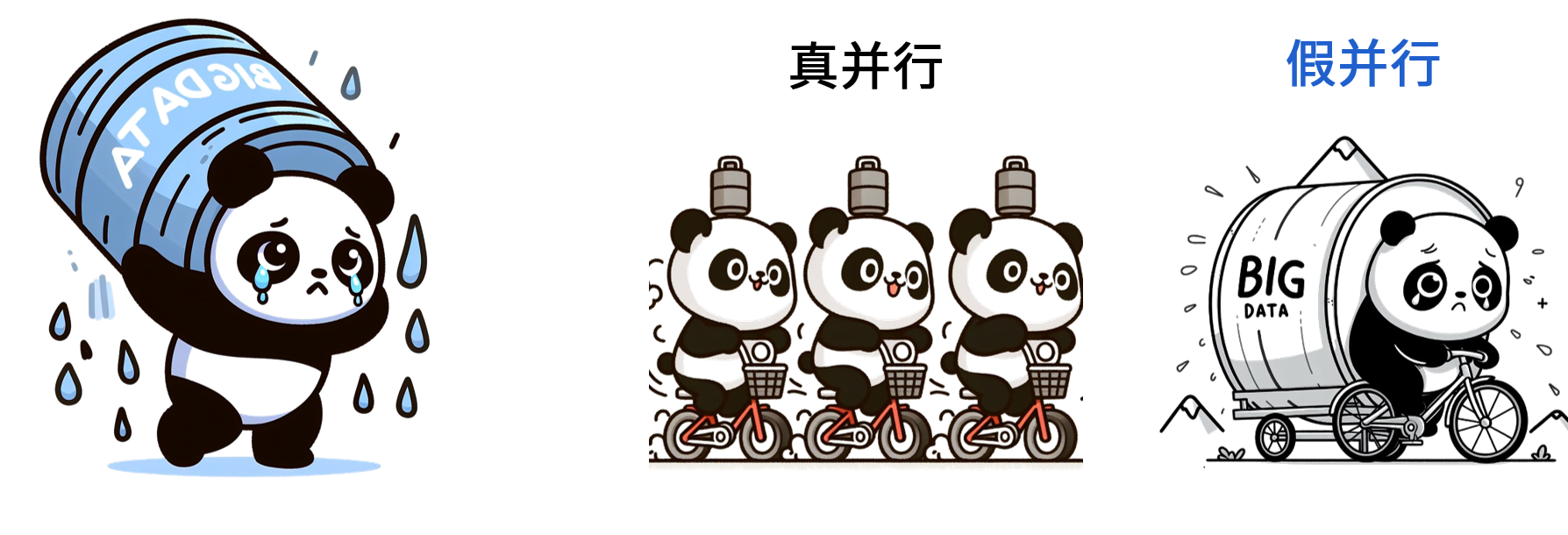

没大数据支持,伪并行

非Java体系,探索结果进企业应用经常还要重写